As machine learning models increasingly assist in interpreting patient records, understanding how to properly evaluate these models becomes crucial for ensuring reliable clinical research outcomes. In previous blog posts, Jay Nanduri, Truveta CTO, gave an overview of Truveta language models and AI infrastructure and of our approach to quality. In this post, we take a deeper look at our framework for AI evaluation: sampling clinical data, selecting quality metrics, and using quality benchmarking to identify and resolve error patterns.

We have developed a robust quality benchmarking approach that we leverage across the lifecycle of custom concept model development and inferencing. The main principles of our approach are: 1) measurement of a core suite of metrics; 2) evaluation across varying data distributions; 3) expert adjudication of semantic accuracy; and 4) holistic lifecycle assessment. In the sections below, we illustrate how we put these principles into practice across different types of quality validation and quality measurement strategies.

Types of quality validation

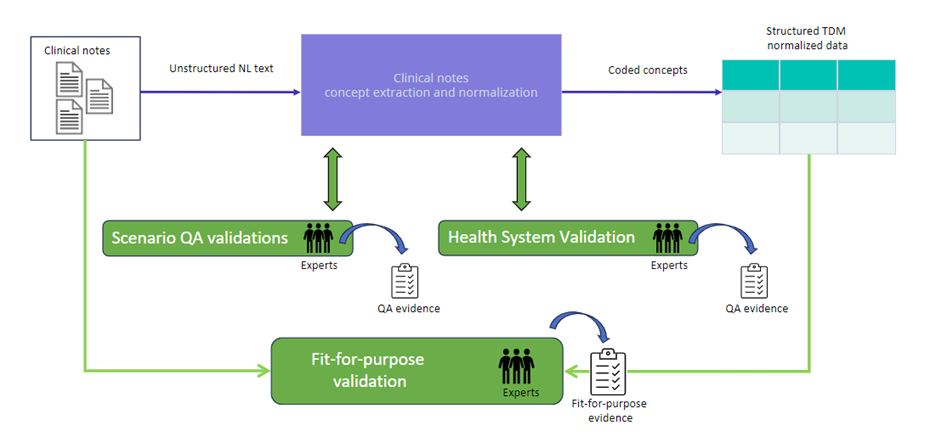

Our quality benchmarking framework has three complementary types of quality assessment: 1) scenario validation; 2) healthcare system validation; and 3) fit-for-purpose validation. Scenario validation evaluates model performance across the full data distribution, ensuring consistent quality at scale. Healthcare system validation comprises assessment stratified by member healthcare system source, recognizing that data from different sources can exhibit distinct characteristics that impact model performance. Fit-for-purpose validation focuses on specific business requirements, measuring performance against precise quality thresholds defined by use case and regulatory needs. Together, these approaches provide comprehensive quality assurance while addressing the unique challenges of each data source and customer requirement.

Fig. 1. An overview of Truveta’s quality benchmarking framework, which employs three main types of validation: 1) scenario validation; 2) healthcare system validation; and 3) fit-for-purpose validation.

Scenario validation

Every model designed to extract data from clinical notes is validated as a precondition of running inference at scale. For this type of assessment, we calculate quality metrics on representative validation and blind sets. Our AI development team participates in the assessment of the validation set but remains fully blind to model performance on the blind set to ensure these measurements reflect generalized performance. We take several steps to guarantee the statistical validity of these quality metric estimates.

We draw the validation and blind sets randomly and concurrently from the entire universe of relevant patient notes in order to ensure they have the same distribution. The distributions of these sets match the distribution of scenario notes across contributing healthcare systems. We also ensure these sets are representative across note types, such as progress notes, discharge summaries, lab results, and history and pathology notes. These steps ensure each set is representative of the distribution of notes we will run inference on when the model graph is deployed.
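As a minimal sketch of the concurrent draw described above, the snippet below stratifies notes by healthcare system and note type and then takes two disjoint, equally sized random samples from each stratum. The field names (`note_id`, `system`, `note_type`) and the function name are hypothetical and chosen only for illustration; the actual sampling pipeline may differ.

```python
import random
from collections import defaultdict


def draw_validation_and_blind(notes, per_set_fraction, seed=0):
    """Draw two disjoint samples with matching stratum proportions.

    notes: list of dicts with hypothetical keys 'note_id', 'system', 'note_type'.
    per_set_fraction: share of each stratum assigned to EACH set (at most 0.5).
    """
    if not 0 < per_set_fraction <= 0.5:
        raise ValueError("per_set_fraction must be in (0, 0.5]")

    rng = random.Random(seed)

    # Group notes by (healthcare system, note type).
    strata = defaultdict(list)
    for note in notes:
        strata[(note["system"], note["note_type"])].append(note)

    validation, blind = [], []
    for key in sorted(strata):
        members = list(strata[key])
        rng.shuffle(members)
        k = int(len(members) * per_set_fraction)  # floor keeps 2 * k <= len(members)
        validation.extend(members[:k])            # first k shuffled notes
        blind.extend(members[k:2 * k])            # next k shuffled notes, disjoint
    return validation, blind
```

Because both sets are cut from the same shuffled stratum in one pass, they cannot overlap and each stratum contributes the same number of notes to both.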

We draw validation and blind sets of the same size. We estimate the sample size needed to yield sufficiently low error margins based on priors of typical model performance in similar scenarios, training set size and project complexity. Complexity scales with the depth and breadth of the tree of concepts to extract.
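To illustrate how a prior on model performance translates into a sample size, here is a simplified sketch based on the standard normal-approximation formula for a proportion, n = z² · p(1 − p) / e². It uses only the expected metric value and the target margin of error; how training set size and project complexity enter the estimate is not covered, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def required_sample_size(expected_metric, margin_of_error, confidence=0.95):
    """Smallest n for which the normal-approximation half-width is <= margin_of_error.

    expected_metric: prior guess for the metric (e.g. 0.9 for 90% precision).
    margin_of_error: desired half-width of the confidence interval (e.g. 0.03).
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    variance = expected_metric * (1 - expected_metric)
    return math.ceil(z ** 2 * variance / margin_of_error ** 2)


# With a prior of 90% precision and a target margin of +/- 3 points:
n = required_sample_size(0.9, 0.03)  # 385 predictions
```

Note that n here counts evaluated predictions rather than notes, so the number of notes to draw depends on how many extractions a typical note yields.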

When selecting notes for quality assessments, we stringently exclude notes the model was exposed to in training or any previous assessment. Further, we exclude notes from any patient whose notes were included in training or any previous assessment. This is because practitioners often copy text between notes for the same patient, and testing the model on these notes may artificially inflate its quality metrics.
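The patient-level exclusion rule can be sketched as a simple filter. The field names `note_id` and `patient_id` are assumptions made for this example.

```python
def eligible_notes(candidates, previously_used):
    """Keep only notes that are safe to use in a new quality assessment.

    candidates / previously_used: lists of dicts with hypothetical keys
    'note_id' and 'patient_id'. previously_used holds every note seen in
    training or in any earlier assessment.
    """
    used_note_ids = {n["note_id"] for n in previously_used}
    used_patient_ids = {n["patient_id"] for n in previously_used}
    return [
        n for n in candidates
        if n["note_id"] not in used_note_ids          # the note itself was never seen
        and n["patient_id"] not in used_patient_ids   # nor any note from the same patient
    ]
```

Filtering on the patient rather than the note is what guards against copied text: a new note from a previously seen patient is excluded even though its note ID is new.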

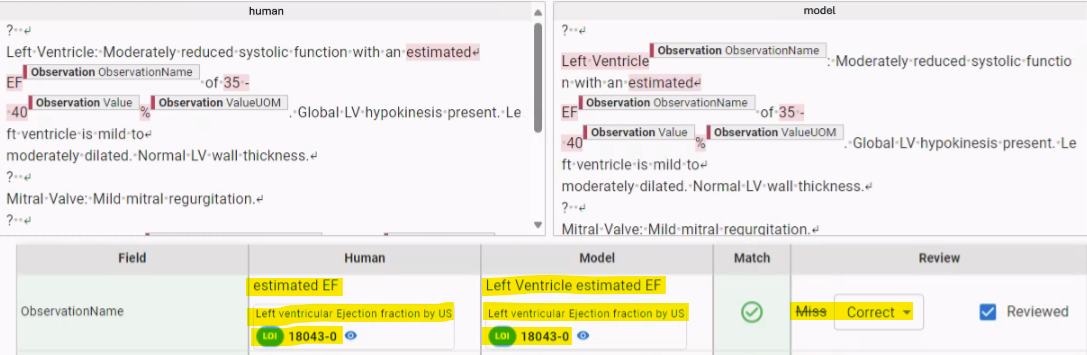

After drawing the test sets, human experts annotate them. We assess overall quality on these sets using a proprietary notes benchmarking platform, which allows our experts to compare model predictions to human-annotated ground truth. The platform enables validation of the entire normalized concept record, including the concept name, attributes, dates, values, and units of measure. It also facilitates adjudication of cases in which model extractions and human annotations do not match exactly but mean the same thing. For example, when extracting data from echocardiogram reports, we saw that human annotations and model extractions sometimes yielded different text strings, such as “estimated EF” and “Left Ventricle estimated EF” (see Fig. 2). However, both could be normalized to the same code, “Left ventricular Ejection fraction by US”, and therefore matched semantically. As the expert evaluates each prediction for semantic accuracy, the platform aggregates counts of true positive, false positive, and false negative predictions. The platform stores quality measurements in association with the test sets and model version so that we can track quality across model maintenance.

Fig. 2. During scenario validation, we compare human annotations to model extractions in the context of the original clinical note. Sometimes these differ but have the same meaning, as in this example: “estimated EF” and “Left Ventricle estimated EF” are both normalized to “Left ventricular Ejection fraction by US”.
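The matching and counting step can be approximated in code as follows. This is only a rough stand-in: in the workflow described above, a human expert adjudicates semantic equivalence, whereas this sketch uses a small, hypothetical lookup table (`NORMALIZE`) to map surface strings to a normalized concept before comparing.

```python
from collections import Counter

# Hypothetical normalization table, for illustration only.
NORMALIZE = {
    "estimated ef": "Left ventricular Ejection fraction by US",
    "left ventricle estimated ef": "Left ventricular Ejection fraction by US",
}


def normalize(concept_text):
    """Map a surface string to its normalized concept (or a cleaned-up fallback)."""
    key = " ".join(concept_text.lower().split())
    return NORMALIZE.get(key, key)


def score_note(human, model):
    """Count TP, FP, FN for one note.

    human / model: lists of (concept_text, value) tuples.
    """
    gold = Counter((normalize(c), v) for c, v in human)
    pred = Counter((normalize(c), v) for c, v in model)
    tp = sum((gold & pred).values())   # extractions that match an annotation
    fp = sum((pred - gold).values())   # extractions with no matching annotation
    fn = sum((gold - pred).values())   # annotations the model missed
    return tp, fp, fn
```

With this table, a human annotation of “estimated EF” and a model extraction of “Left Ventricle estimated EF” with the same value count as one true positive rather than one false positive plus one false negative.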

Healthcare system validation

In his quality overview blog post, Jay Nanduri noted that the way healthcare systems present their data to us can vary from one system to another and over time, enough to warrant healthcare system-specific quality validation. Here we provide more detail about this type of validation.

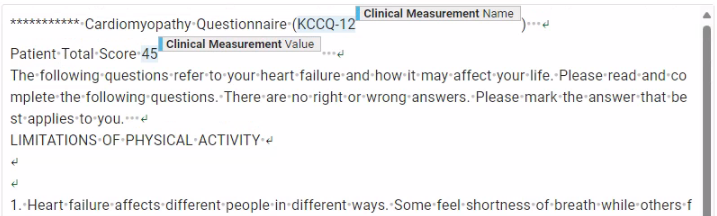

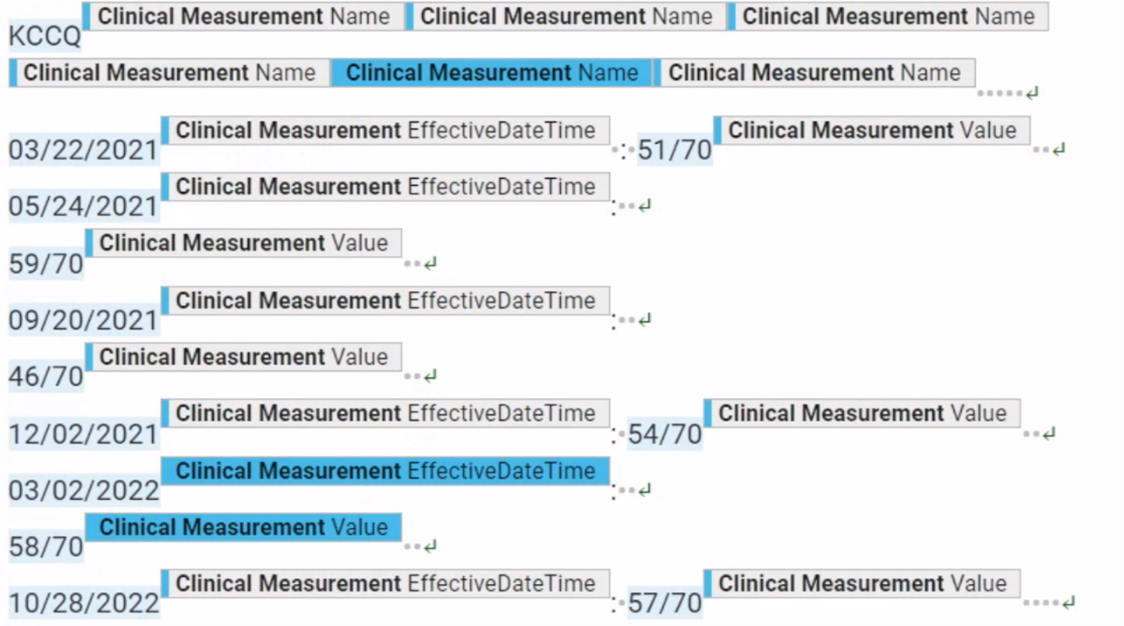

We monitor potential model performance variation across member healthcare systems at each stage of model development and inference. We have found that healthcare systems use different clinical note formats. Notes can contain consistent patterns unique to their source healthcare system that models must “understand” to extract concepts accurately. For example, for a recent study of heart failure, we extracted Kansas City Cardiomyopathy Questionnaire (KCCQ) score data. Overall scenario validation yielded good model performance, but when we stratified performance across member healthcare systems, we saw substandard performance for one healthcare system. In most healthcare systems, notes documented KCCQ scores in a format similar to the one shown in Fig. 3. Notes from one healthcare system, however, used the different format shown in Fig. 4. Our model initially extracted scores from these notes incorrectly (Table 1); after we trained it with additional data, it extracted them correctly (Table 2).

Fig. 3. An example of how Kansas City Cardiomyopathy Questionnaire (KCCQ) scores were typically reported in clinical notes for a recent study of heart failure.

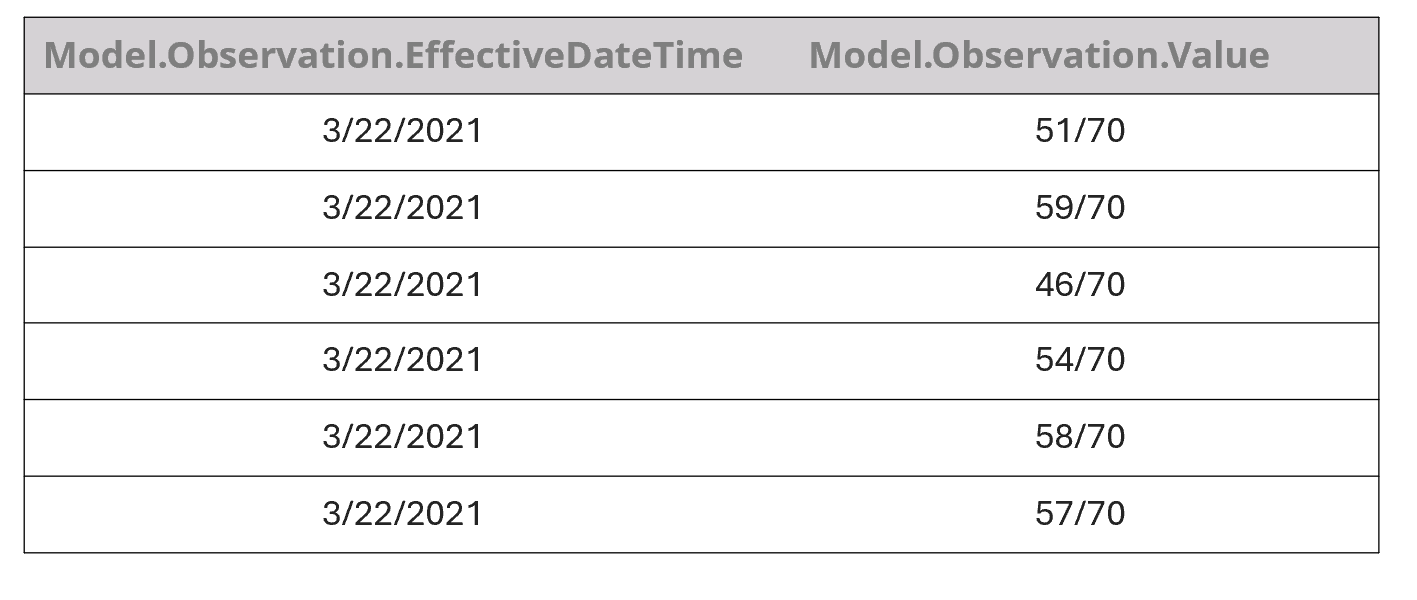

Fig. 4. An example of a format for reporting KCCQ score data we found in clinical notes from only one healthcare system.

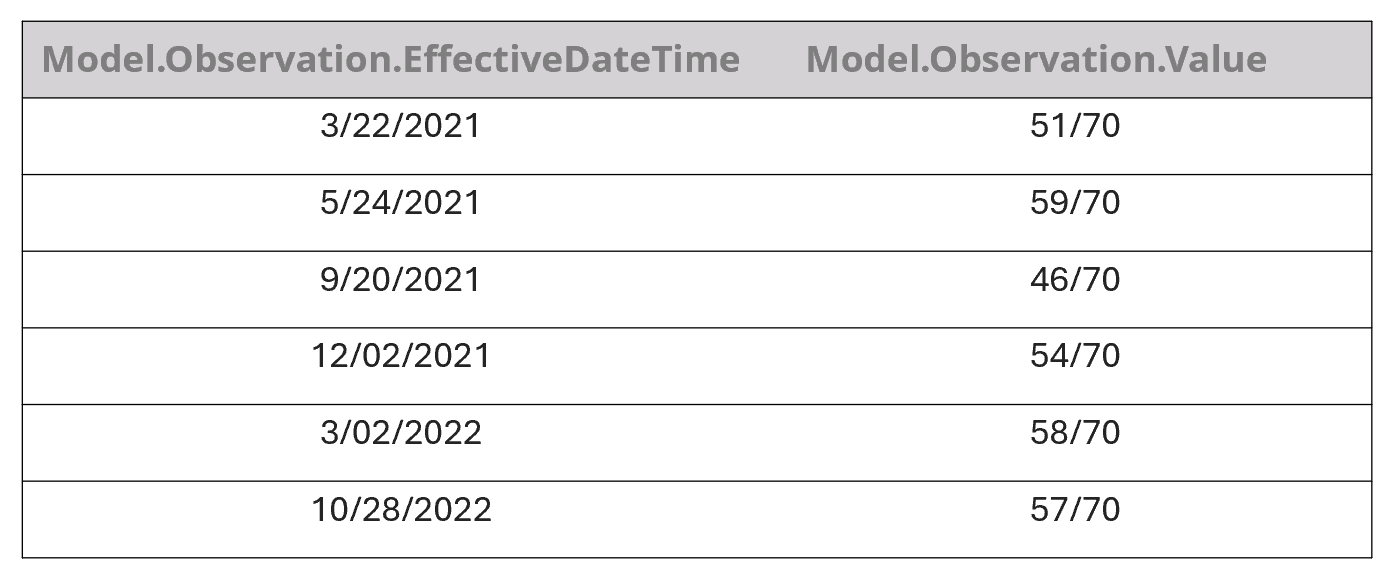

Table 1. An example of the way our model initially incorrectly extracted KCCQ scores from the note shown in Fig. 4 for a single date. It then continued to associate every value with every date.

Table 2. An example of the way our model correctly extracted KCCQ scores from the note shown in Fig. 4 after we trained it with additional data.

Onboarding of new healthcare systems

Because data formats can vary by healthcare system, when we onboard a new healthcare system to our infrastructure, we validate existing models on the new data to be sure quality meets our standards. If we detect uneven quality, we create training data from the new healthcare system and use it to train the model to handle any new patterns. In short, this process ensures models are healthcare system agnostic and can ultimately run on data across our member healthcare systems.
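Stratifying quality by healthcare system, whether during routine validation or when onboarding a new system, can be sketched as below. The input format (a stream of `(system, outcome)` pairs) and the threshold parameters are assumptions made for this example; actual quality bars depend on the scenario.

```python
from collections import defaultdict


def precision_recall_by_system(records):
    """Compute (precision, recall) per healthcare system.

    records: iterable of (system, outcome) pairs, outcome in {'TP', 'FP', 'FN'}.
    A metric is None when its denominator is zero.
    """
    counts = defaultdict(lambda: {"TP": 0, "FP": 0, "FN": 0})
    for system, outcome in records:
        counts[system][outcome] += 1

    metrics = {}
    for system, c in counts.items():
        predicted = c["TP"] + c["FP"]
        actual = c["TP"] + c["FN"]
        precision = c["TP"] / predicted if predicted else None
        recall = c["TP"] / actual if actual else None
        metrics[system] = (precision, recall)
    return metrics


def systems_below_bar(metrics, min_precision, min_recall):
    """Return systems whose precision or recall is undefined or below the bar."""
    return sorted(
        system for system, (p, r) in metrics.items()
        if p is None or r is None or p < min_precision or r < min_recall
    )
```

An aggregate metric can look healthy while one system falls well below the bar, which is the situation the KCCQ example above describes.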

Fit-for-purpose validation

We perform custom validation upon client request to assess the quality of data for a particular study per client expectations. This type of evaluation can take many forms, depending upon client requirements. For example, we measure the precision and recall of patient inclusion methodology by comparing the result of our engineering systems’ inclusion process to that of human experts. We also generate fine-grained quality metrics that focus on subsets of data; for example, where a certain set of values is observed for a certain measurement. This allows our clients to scrutinize the accuracy of the data as it supports key research outcomes. Human experts determine whether quality measurements are within an acceptable range for the research study being envisioned, which is highly dependent on the specific nature of the client research question.

Measurement

Our measurement approach builds upon standard AI metrics and sampling practices while incorporating targeted validation strategies driven by business priorities. We carefully select metrics based on their relevance to customer needs and regulatory requirements and make them available to our customers for their studies. We also deliberately oversample critical edge cases and rare scenarios to rigorously validate model performance where accuracy matters most.

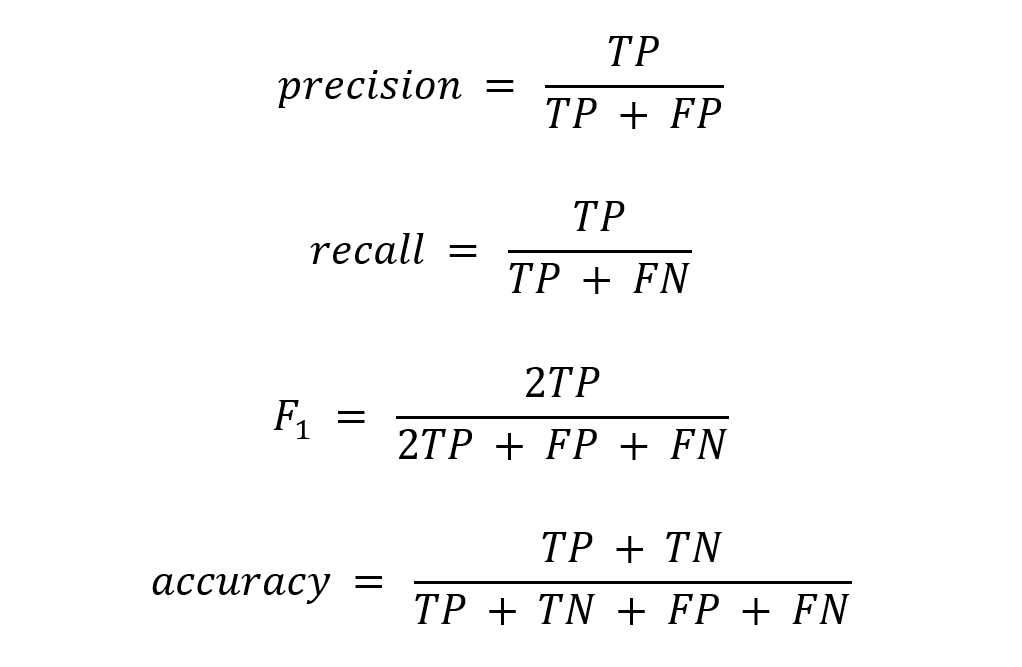

Quality metrics

We use metrics including precision, recall, and F1 score to gate the production deployment of all models, including research scenario-specific models and model graphs. As a standard practice, we calculate 95% confidence intervals for each measured metric in two ways: under the assumption that the underlying distribution of the metric is normal, and using the more conservative Chebyshev’s inequality, which does not rely on this assumption. We verify that quality metrics are acceptable according to benchmarks that we have devised based on comparable results in the literature.
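The two kinds of interval can be compared with a short sketch. It assumes precision is estimated as a simple proportion with standard error sqrt(p(1 − p)/n) and plugs that estimate into both bounds; the exact estimator used in practice is not specified here, and the function name is hypothetical. Chebyshev’s inequality states that at most 1/k² of the probability mass lies more than k standard deviations from the mean, so a 95% bound uses k = 1/sqrt(0.05), roughly 4.47, compared with roughly 1.96 under normality.

```python
import math
from statistics import NormalDist


def precision_intervals(tp, fp, confidence=0.95):
    """Return the precision estimate with normal and Chebyshev intervals."""
    n = tp + fp
    p = tp / n
    se = math.sqrt(p * (1 - p) / n)       # estimated standard error of a proportion
    alpha = 1 - confidence

    z = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95%
    k = 1 / math.sqrt(alpha)                 # about 4.47 for 95%

    def clamp(low, high):
        # A proportion cannot leave the [0, 1] range.
        return max(0.0, low), min(1.0, high)

    return {
        "precision": p,
        "normal": clamp(p - z * se, p + z * se),
        "chebyshev": clamp(p - k * se, p + k * se),
    }
```

For the same counts, the Chebyshev interval is always at least as wide as the normal one, which is what makes it the more conservative check.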

In general, we prioritize precision. That is, we maintain a high bar for precision and strive to achieve as much recall as possible. This is because researchers will treat the extracted and normalized concepts as factual knowledge in their research, and a lack of precision can directly affect their conclusions. In contrast, low recall has a more indirect effect. It may reduce the power of their analysis, and in some cases, induce a bias by not fully recalling certain cohorts within the population. So, we typically set the confidence threshold to achieve at least human-expert-like precision. We opt to send moderate to low-confidence cases to expert humans for their evaluation and use their annotations for active and reinforcement learning of the AI models.

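These metrics are defined as:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}, \qquad F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

where TP = true positives; FP = false positives; TN = true negatives; FN = false negatives.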
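One way to set a confidence threshold that favors precision, as described above, is to scan validation predictions from most to least confident and keep the lowest threshold at which the accepted predictions still meet the precision target. This is a sketch under assumed inputs (`(confidence, is_correct)` pairs from an annotated set); the function names are hypothetical.

```python
def pick_threshold(scored, target_precision):
    """Lowest confidence threshold whose accepted predictions meet the target.

    scored: list of (confidence, is_correct) pairs from an annotated set.
    Returns None if no threshold reaches the target precision.
    """
    ranked = sorted(scored, key=lambda s: s[0], reverse=True)
    best = None
    correct = 0
    for i, (confidence, is_correct) in enumerate(ranked, start=1):
        correct += bool(is_correct)
        # Only evaluate once every prediction sharing this confidence is counted.
        if i < len(ranked) and ranked[i][0] == confidence:
            continue
        if correct / i >= target_precision:
            best = confidence
    return best


def route(predictions, threshold):
    """Split (confidence, payload) pairs into auto-accepted and human-review lists."""
    accepted = [p for p in predictions if p[0] >= threshold]
    review = [p for p in predictions if p[0] < threshold]
    return accepted, review
```

Lowering the threshold trades precision for recall, so choosing the lowest threshold that still meets the precision bar retains as much recall as that bar allows; everything below it goes to expert review.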

Long tail validation

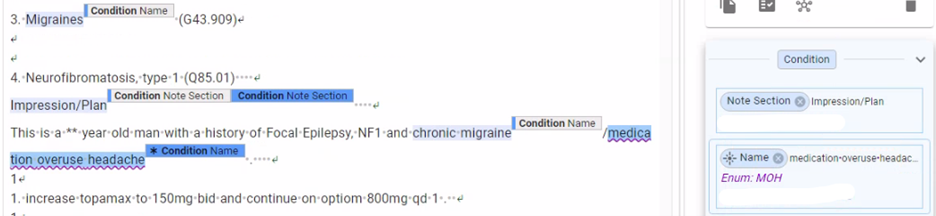

We validate scenario model performance across varying data distributions and continuously across the model lifecycle. When performance on rare, long-tail concepts falls short, we remediate by creating additional training datasets that oversample those concepts. For example, while training a model to extract data about migraines, we initially observed substandard performance on certain secondary headache disorders of interest to our customer, such as medication overuse headaches (see Fig. 5). By searching for key terms, we selected additional clinical notes that mentioned these concepts. We then annotated them, trained the model with them, and improved the model’s precision and recall for these key concepts.

Fig. 5. An example note containing the medication overuse headache concept. When we saw substandard model performance in extracting this concept, we were able to generate additional training data and improve the model’s precision and recall.

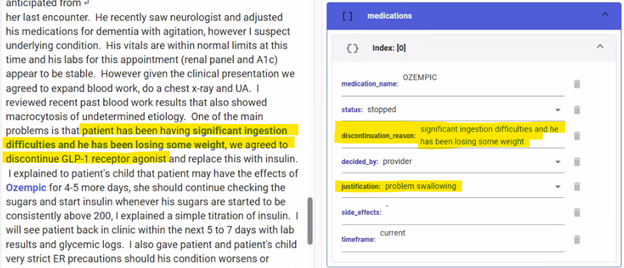

Fig. 6. An example of an incorrectly extracted discontinuation reason. Here, the reason for discontinuing the injectable GLP-1 medication was extracted as “problem swallowing”. After identifying this error, we sampled and annotated additional training data to improve model performance.
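The key-term search used to find additional training notes for long-tail concepts could look like the sketch below. The term list and the `text` field are illustrative assumptions, not the actual search configuration.

```python
import re


def find_candidate_notes(notes, terms):
    """Return notes whose text mentions any of the given terms.

    notes: list of dicts with a hypothetical 'text' key.
    terms: phrases to search for, matched case-insensitively on word boundaries.
    """
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )
    return [n for n in notes if pattern.search(n["text"])]


# Illustrative terms for the migraine example.
TERMS = ["medication overuse headache", "rebound headache"]
```

Notes selected this way are deliberately not representative of the overall distribution, so they are suitable for training but not for the validation or blind sets.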

Clinical validity prior to release

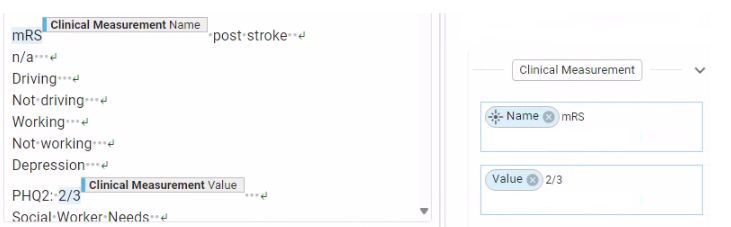

Before we release data extracted at scale, we check that the data are clinically valid, so that researchers can analyze them with confidence. We leverage an internal framework that allows us to author and monitor tests of clinical validity and report and remediate issues. For a recent neurovascular study, this process revealed an issue with values the model had extracted for modified Rankin Scale (mRS). The clinically valid range of values for this measurement was 0 to 6, but our quality assessment revealed that 0.8% of the data was outside these bounds. For example, from the note shown in Fig. 7, the model incorrectly extracted the value of “2/3” for mRS. With our clinical validity testing, we were able to catch and remediate this issue before production release.

Fig. 7. An example of an incorrectly extracted value for the modified Rankin Scale (mRS) measurement. We identified this error via clinical validity tests and were able to remediate this issue before production release.
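A range test like the one that caught the mRS issue can be sketched in a few lines. This is an illustration only and does not represent the internal framework; the function name and input format are assumptions.

```python
def check_mrs_values(raw_values, low=0, high=6):
    """Flag extracted mRS values that are not whole numbers within [low, high].

    raw_values: list of extracted values as strings (e.g. '3', '2/3').
    Returns (invalid_values, invalid_rate).
    """
    invalid = []
    for raw in raw_values:
        try:
            value = int(str(raw).strip())  # '2/3' raises ValueError
        except ValueError:
            invalid.append(raw)
            continue
        if not low <= value <= high:
            invalid.append(raw)
    rate = len(invalid) / len(raw_values) if raw_values else 0.0
    return invalid, rate
```

Tracking the invalid rate alongside the flagged values makes it possible to set a release gate, such as requiring the rate to be zero or below an agreed tolerance.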

Continuous monitoring post release

After we release scenario models, we continue to monitor them by periodically re-running the quality assurance process to check for degradation in quality due to drift in data distributions. This drift can happen for multiple reasons: organic changes in the real world, new patient populations getting added to the system, changes in Truveta Data Model schema, and changes in the way healthcare systems present the data to us. Our continuous monitoring framework involves sampling current data distributions at regular intervals, reporting results, and remediating issues.
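As one possible way to decide whether a re-measured metric signals real degradation rather than sampling noise, the sketch below applies a one-sided two-proportion z-test to precision counts from the baseline assessment and a fresh sample. This specific test is an assumption made for illustration; the monitoring framework described above may use a different criterion.

```python
import math
from statistics import NormalDist


def precision_dropped(base_tp, base_fp, cur_tp, cur_fp, alpha=0.05):
    """True if current precision is significantly lower than baseline precision."""
    n_base = base_tp + base_fp
    n_cur = cur_tp + cur_fp
    p_base = base_tp / n_base
    p_cur = cur_tp / n_cur

    # Pooled proportion under the null hypothesis of no change.
    pooled = (base_tp + cur_tp) / (n_base + n_cur)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_base + 1 / n_cur))
    if se == 0:
        return False  # both samples are all-correct or all-wrong; no detectable drop

    z = (p_base - p_cur) / se
    p_value = 1 - NormalDist().cdf(z)  # one-sided: only a drop counts
    return p_value < alpha
```

A small dip between assessments will usually not trigger the flag, while a large drop on a comparably sized sample will, which helps focus remediation on genuine drift.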

Conclusion

Our AI quality benchmarking framework goes beyond statistical calculation, embodying a nuanced approach that grounds quantitative metrics in rigorous methodological practices. We leverage statistical techniques to estimate quality parameters with precision, but our true insights emerge from contextual interpretation, domain expertise, and an evolving understanding of model capabilities. This approach transforms raw numerical estimates into meaningful assessments that capture the complex, dynamic nature of AI, ensuring that the data extracted meets the highest standards of quality and regulatory compliance.