In a new peer-reviewed study published in Radiology Advances, Truveta scientists developed and validated a multimodal deep learning model using chest radiographs and de-identified electronic health records from Truveta Data. By combining imaging with commonly available clinical variables, the team demonstrated that the AI model can estimate body composition metrics at scale with greater precision than BMI alone. The deep learning model is available as a Python library on Truveta’s GitHub for others to experiment with.

What the study shows

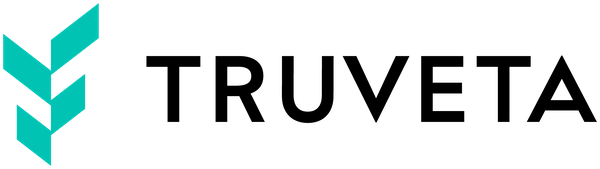

Using Truveta Data, researchers trained and tested a multimodal model on more than 1,100 patients who had both chest radiographs (X-rays) and abdominal CT scans. By combining imaging with common clinical variables (age, sex, height, and weight), the team tested whether the AI model could predict fat and muscle volumes usually captured by specialized body composition scans.

Key findings from the analysis include:

- Multimodal AI outperformed single-source models. The late fusion multimodal model achieved the best performance across nearly all body composition measures, significantly outperforming imaging-only and clinical-only approaches.

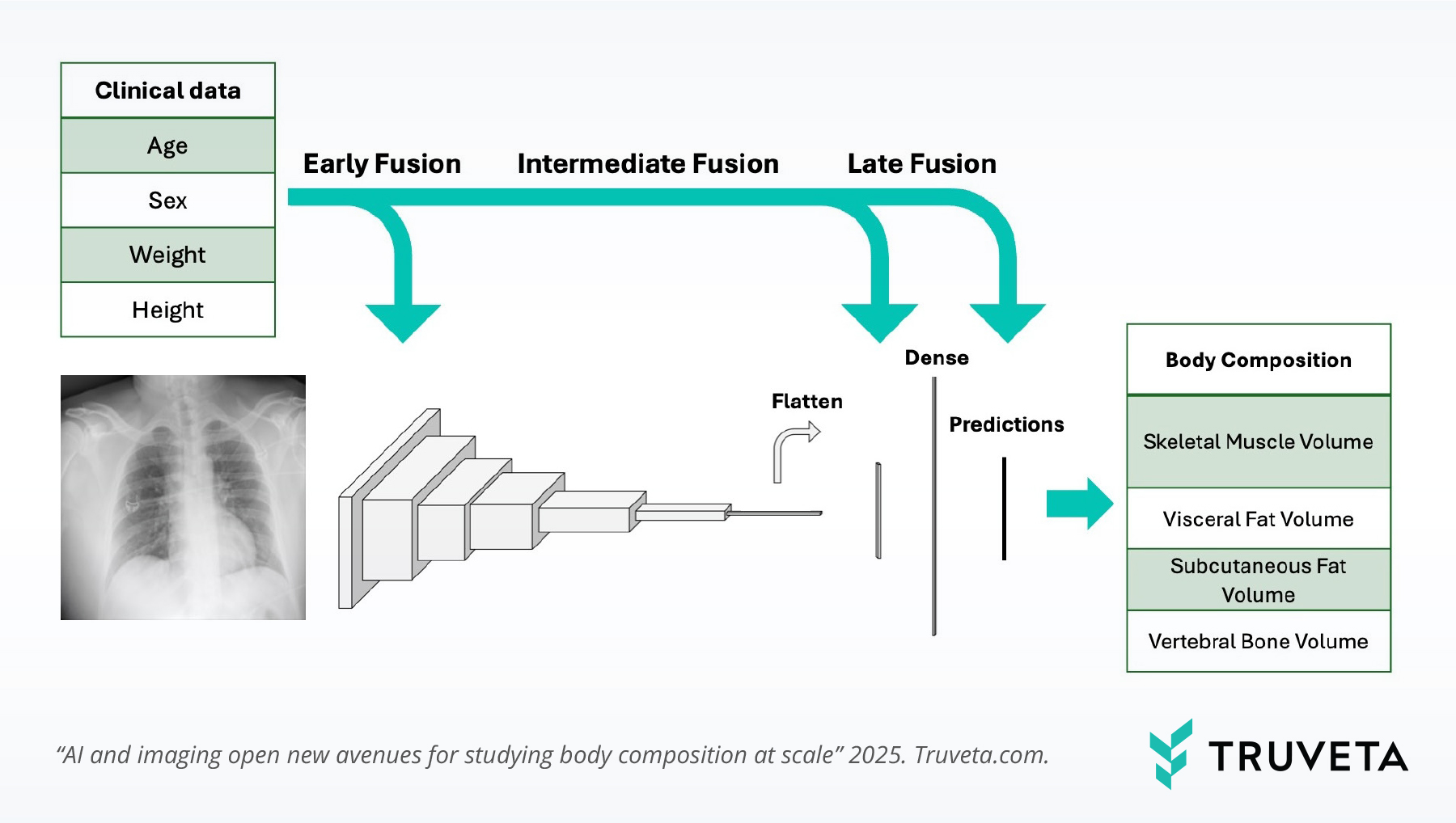

- Subcutaneous fat was most accurately predicted. The model reached a Pearson correlation of 0.85 for subcutaneous fat volume.

- Visceral fat was well estimated. The model achieved a correlation of 0.76 for visceral fat, a critical measure for cardiometabolic risk.

- Skeletal muscle prediction was more limited. While performance was moderate, the model still outperformed clinical-only models.
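
To make the terms in these findings concrete, here is a minimal sketch of late fusion and Pearson correlation on synthetic data. It is not the study's model or the API of Truveta's library: the feature arrays, weights, and helper names (`fit_linear`, `predict_linear`, `pearson_r`, `run_demo`) are hypothetical, simple linear models stand in for the deep learning branches, and all four clinical variables are treated as standardized numeric columns for simplicity. The idea it illustrates is that each modality gets its own model, and a small fusion step then combines their predictions.

```python
import numpy as np


def fit_linear(X, y):
    """Least-squares linear fit with an intercept term; returns coefficients."""
    X1 = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coef


def predict_linear(coef, X):
    """Apply coefficients produced by fit_linear to new rows."""
    return np.column_stack([np.ones(len(X)), X]) @ coef


def pearson_r(a, b):
    """Pearson correlation between two 1-D arrays."""
    return float(np.corrcoef(a, b)[0, 1])


def run_demo(seed=0, n=1000, n_train=700):
    rng = np.random.default_rng(seed)

    # Synthetic stand-ins (assumptions, not real patient data):
    # 8 "image-derived" features and 4 "clinical" features.
    X_img = rng.normal(size=(n, 8))
    X_clin = rng.normal(size=(n, 4))

    # Synthetic target that depends on both modalities plus noise.
    y = (
        X_img @ np.full(8, 0.5)
        + X_clin @ np.array([1.0, -0.5, 0.8, 1.2])
        + rng.normal(size=n)
    )

    train, test = slice(0, n_train), slice(n_train, n)

    # Step 1: fit one model per modality.
    coef_img = fit_linear(X_img[train], y[train])
    coef_clin = fit_linear(X_clin[train], y[train])

    # Step 2: late fusion. Fit a small model on the two per-modality
    # predictions instead of on the raw features.
    stacked_train = np.column_stack([
        predict_linear(coef_img, X_img[train]),
        predict_linear(coef_clin, X_clin[train]),
    ])
    coef_fuse = fit_linear(stacked_train, y[train])

    # Step 3: score each approach on held-out rows with Pearson r.
    pred_img = predict_linear(coef_img, X_img[test])
    pred_clin = predict_linear(coef_clin, X_clin[test])
    pred_fused = predict_linear(coef_fuse, np.column_stack([pred_img, pred_clin]))

    return {
        "imaging_only": pearson_r(pred_img, y[test]),
        "clinical_only": pearson_r(pred_clin, y[test]),
        "late_fusion": pearson_r(pred_fused, y[test]),
    }


if __name__ == "__main__":
    for name, r in run_demo().items():
        print(f"{name}: r = {r:.2f}")
```

Because the synthetic target is built from both feature sets, the fused predictor should correlate more strongly with it than either single-source model. The numbers this prints describe only the toy data and say nothing about the study's results.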

Why it matters

Body composition is a stronger predictor of health outcomes than BMI or weight alone. Yet large-scale use has been limited by the need for advanced imaging such as CT or MRI. This research shows how multimodal AI, pairing chest X-rays with structured clinical data, can provide a scalable and less invasive alternative.

With this approach, researchers can:

- Scale body composition research across large, diverse populations without requiring costly or invasive imaging.

- Explore how fat distribution relates to outcomes such as cardiovascular disease, diabetes, or cancer.

- Enable new insights for clinical trials and innovation, where patient selection and phenotyping often depend on body composition metrics.

Looking ahead

By showing that chest radiographs and EHR data can reliably estimate fat distribution, this study lays the foundation for future research that requires accurate, scalable body composition measures. The findings highlight the power of multimodal AI models and their potential to help answer new questions about health, disease risk, and treatment response that BMI alone cannot address.
